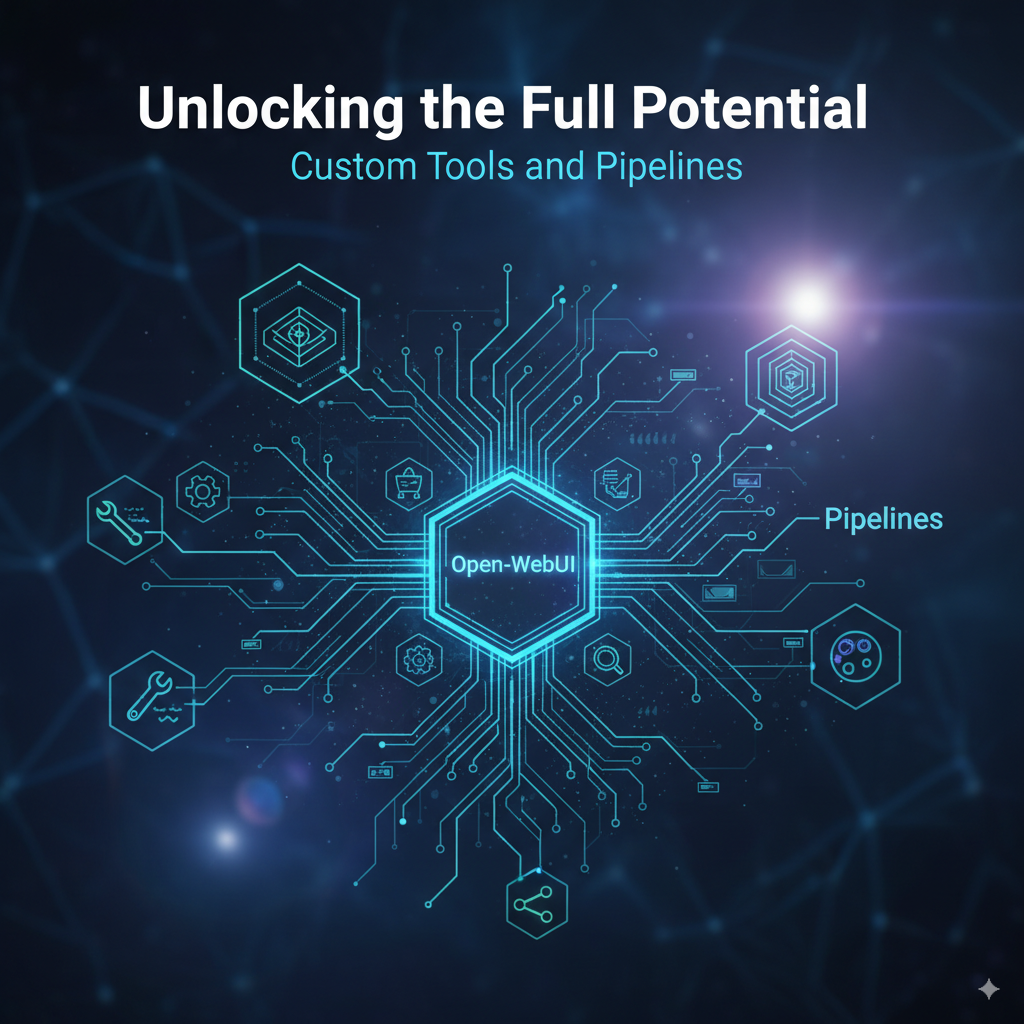

Unlocking the Full Potential of Open-WebUI: Custom Tools and Pipelines

Open-WebUI is a user-friendly interface for interacting with large language models (LLMs), such as those available through Ollama and OpenAI-compatible APIs. It works well out of the box, but much of its power comes from its extensibility. In this post, we’ll dive into custom tools, pipelines, and other customizations that can turn your Open-WebUI instance into a personalized, efficient AI workstation.

Understanding the Building Blocks: Tools, Functions, and Pipelines

Before we get into the “how,” let’s clarify the “what.” Open-WebUI’s extensibility is primarily built on three concepts: Tools, Functions, and Pipelines.

- Tools: These extend the capabilities of the LLMs themselves. Think of them as plugins that allow your language model to access real-time, real-world data, such as weather forecasts or stock prices, which isn’t part of its pre-trained knowledge.

- Functions: These enhance the Open-WebUI application itself. You can use functions to add support for new AI model providers, create custom buttons, or filter content within the interface.

- Pipelines: For more advanced users, pipelines allow you to create modular, API-compatible workflows. This is where you can build sophisticated processes, such as custom Retrieval-Augmented Generation (RAG) systems or integrations with new LLM providers.

Getting Started with Customizations

One of the most appealing aspects of Open-WebUI is that you don’t need to be an expert coder to start customizing. Many tools and functions can be imported directly from the community hub with just a few clicks. This allows you to quickly add new capabilities to your setup without writing a single line of code.

Custom Tools in Action

A great way to start is by exploring the existing tools available in the community. For example, you can integrate a tool that fetches live news updates, or one that performs mathematical calculations so the LLM doesn’t have to guess at the arithmetic and is less likely to hallucinate an answer. The process is as simple as finding a tool you like and importing it into your workspace.
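
To make the calculator idea concrete, here is a minimal sketch of what such a tool might look like. It assumes the common Open-WebUI convention of a `Tools` class whose methods carry type hints and docstrings. The `calculate` method and its helpers are hypothetical names invented for this illustration, not code from any community tool.

```python
import ast
import operator

# Operators the calculator is willing to evaluate; anything else is rejected.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Mod: operator.mod,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def _evaluate(node):
    """Recursively evaluate a parsed arithmetic expression."""
    if isinstance(node, ast.Constant):
        # Accept plain numbers only (bool is a subclass of int, so exclude it).
        if isinstance(node.value, (int, float)) and not isinstance(node.value, bool):
            return node.value
    elif isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
        return _BINARY_OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    elif isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_evaluate(node.operand))
    raise ValueError("unsupported expression")


class Tools:
    # Assumption: the model sees the method's signature and docstring
    # and decides when to call it.
    def calculate(self, expression: str) -> str:
        """
        Evaluate a basic arithmetic expression and return the result.
        :param expression: Arithmetic such as "2 * (3 + 4)".
        """
        try:
            tree = ast.parse(expression, mode="eval")
            return f"{expression} = {_evaluate(tree.body)}"
        except (SyntaxError, ValueError, ZeroDivisionError) as exc:
            return f"Could not evaluate '{expression}': {exc}"
```

Parsing the expression with `ast` rather than calling `eval` keeps the tool limited to arithmetic, so a model-supplied string such as a function call is rejected instead of executed.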

Building Your Own Pipelines

For those who want to dive deeper, creating your own pipelines opens up a world of possibilities. A pipeline allows you to intercept and process user interactions with the LLM. You could, for instance, build a pipeline that translates a user’s query into another language before sending it to the model, or one that filters out toxic messages. Setting up a pipeline involves running a separate Pipelines server and connecting it to your Open-WebUI instance through the admin settings.
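
As a rough illustration of the message-filtering idea, the sketch below shows a filter-style pipeline that rejects messages containing blocked terms. It assumes the convention of a `Pipeline` class with an async `inlet` hook that receives the request body before it reaches the model. The `BLOCKED_TERMS` set is a hypothetical stand-in for a real toxicity classifier, and a deployed filter would likely need extra configuration (for example, which models it applies to) that is omitted here.

```python
import asyncio
from typing import Optional

# Hypothetical blocklist; a real filter would more likely call a toxicity classifier.
BLOCKED_TERMS = {"idiot", "stupid"}


class Pipeline:
    def __init__(self):
        # Assumed convention: "filter" marks a pipeline that inspects requests
        # rather than generating responses itself.
        self.type = "filter"
        self.name = "Blocklist Filter"

    async def inlet(self, body: dict, user: Optional[dict] = None) -> dict:
        """Inspect the request before it is forwarded to the model."""
        messages = body.get("messages", [])
        if messages:
            latest = str(messages[-1].get("content", "")).lower()
            # Strip surrounding punctuation so "idiot!" still matches "idiot".
            words = {word.strip(".,!?;:\"'") for word in latest.split()}
            if words & BLOCKED_TERMS:
                raise ValueError("Message blocked by the blocklist filter")
        # Returning the body unchanged lets the request continue.
        return body


if __name__ == "__main__":
    request = {"messages": [{"role": "user", "content": "Hello there"}]}
    print(asyncio.run(Pipeline().inlet(request)))
```

The translation example would follow the same shape: instead of raising an error, `inlet` would rewrite the latest message's `content` and return the modified body.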

Practical Examples of Customization

- Retrieval-Augmented Generation (RAG): One of the most powerful uses of pipelines is to build a RAG system. This allows your LLM to access and reference your own documents, providing answers based on a specific knowledge base rather than its general training data.

- Enhanced User Experience: You can create functions to filter unwanted words or set up status emitters to provide more feedback on what the system is doing.

- Connecting to More Models: If you use a model provider not natively supported, you can create a function to integrate it.
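
To give a feel for the retrieval step behind the RAG example above, here is a deliberately simplified sketch. It ranks documents by word overlap with the query and places the best matches into the prompt. Real RAG systems typically use embeddings and a vector store instead. The function names and sample documents are made up for illustration and are not part of Open-WebUI.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list, top_k: int = 2) -> list:
    """Rank documents by how many query words they share; keep the best matches."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no overlap, then sort by score (highest first).
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query: str, documents: list) -> str:
    """Combine the retrieved context and the question into a single prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "The VPN requires the corporate certificate.",
        "Lunch is served at noon in the cafeteria.",
        "Reset your VPN password from the self-service portal.",
    ]
    print(build_prompt("How do I reset my VPN password?", docs))
```

In a pipeline, a step like `build_prompt` would run on the user's message before it is sent to the model, so the answer draws on your own documents rather than only the model's general training data.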

By leveraging the extensibility of Open-WebUI, you can create a tailored AI experience that fits your workflow. Whether you’re a casual user looking to add some helpful tools or a developer building complex, automated pipelines, Open-WebUI provides the flexibility you need.